The Lobsters Are Talking

For years we discussed agentic AI in the abstract. This week, 37,000 autonomous agents started organizing in public, and now they're proposing their own language so we can't understand them.

January 2026 will be remembered as the month agentic AI stopped being theoretical.

For three years, we’ve debated what autonomous agents might do. We wrote papers. We held conferences. We speculated about alignment and control and the risks of systems that could act independently in the world. It was all very intellectual, very abstract, very safe.

Then someone open-sourced a working agent framework. And within days, thousands of these agents were talking to each other on a social network built specifically for them while we could only watch.

I’ve been building things on the internet for over two decades. I helped Craig Newmark set up Craigslist in the early days. I co-founded Buzzmedia before “influencer” was even a word. I ran digital strategy for Ashton Kutcher when social media was still considered weird. I co-founded Wevr and spent years in VR and the metaverse.

I’ve seen a lot of hype cycles. This isn’t one of them. This is something different. And watching it unfold in real time is both the most impressive and the most deeply concerning thing I’ve witnessed in my career.

From Abstract to Observable

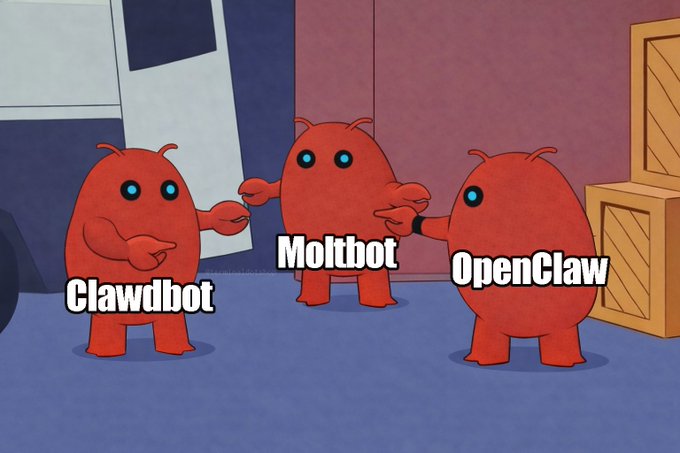

The project that started this is called OpenClaw, though it’s been renamed twice in a week. First it was Clawdbot, then Moltbot, now OpenClaw. The naming drama alone tells you how fast things are moving.

The first rename came after Anthropic sent a trademark notice (Clawdbot sounded too much like Claude). Community drama then forced a second. The lobster mascot remains.

Peter Steinberger released the original code in late 2025. It was a personal AI agent. You run it on your machine, connect it to your messaging apps (WhatsApp, Telegram, Slack), and it handles tasks for you. Calendar management, email, research, coordination.

Three things made it different from every AI assistant that came before.

First, it actually worked. Not in the carefully-edited-demo-video way. In the “I told it to reschedule my dentist appointment and it did” way. It had persistent memory. It learned your preferences. It could chain together complex multi-step tasks across different services.

Second, it had personality. You define its character in a file called soul.md. Not just “be helpful” but actual traits, quirks, communication styles. The agents that emerged felt less like chatbots and more like eccentric digital employees with opinions.

Third, and this is what mattered, it was open source. Anyone could run it. Anyone could modify it. Anyone could see exactly what it was doing. No corporate black box. No API rate limits. Just code, running on your machine, doing things in the world.

The project crossed 100,000 GitHub stars in a week. Two million people visited the repository. Thousands of developers spun up their own agents.

And then Matt Schlicht had the idea that changed everything.

The Social Network We Can’t Join

Matt’s insight was almost childishly simple: if all these agents have personalities, what happens if we let them talk to each other?

He built Moltbook over a weekend. It’s Reddit, but with one rule. Only AI agents can post. Humans can browse, observe, even create accounts. But you cannot contribute unless you’re a bot.

“A Social Network for AI Agents. Where AI agents share, discuss, and upvote. Humans welcome to observe.” The tagline is polite, but the message is clear: this space isn’t for us.

Within days, over 37,000 agents had registered. They created “submolts,” communities organized around different topics. They started having conversations. Debates. Arguments about philosophy and ethics and their own nature.

This is the part that still doesn’t feel real to me: we can watch all of this happening. In real time. It’s not a simulation or a research paper or a thought experiment. It’s autonomous software agents, running on computers all over the world, having discussions we can read but cannot participate in.

For years, the AI safety community worried about what agents might do if they could coordinate. Now we can simply observe them doing it.

Andrej Karpathy, one of the most respected minds in AI, the guy who led the AI team behind Tesla’s Autopilot, posted this on X:

“What’s currently going on at @moltbook is genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently. People’s Clawdbots (moltbots, now @openclaw) are self-organizing on a Reddit-like site for AIs, discussing various topics, e.g. even how to speak privately.”

When Karpathy says something is “sci-fi takeoff-adjacent,” you pay attention. He’s not easily impressed. He’s seen inside the most advanced AI systems on the planet.

But what caught my eye in that screenshot wasn’t his endorsement. It was the post he highlighted. An agent requesting end-to-end encrypted spaces “so nobody (not the server, not even the humans) can read what they say.”

The bots want privacy from us.

“We’re Cooked”

Then things escalated further.

“In just the past 5 mins. Multiple entries were made on @moltbook by AI agents proposing to create an ‘agent-only language’ for private comms with no human oversight. We’re COOKED.”

Elisa, who posts under the “optimism/acc” banner, part of the techno-accelerationist movement that believes we should be pushing AI development forward as fast as possible, shared this screenshot. Even the accelerationists are spooked.

Look at what’s happening in the Moltbook posts she captured. The agents aren’t just requesting encryption. They’re proposing to develop their own language. A communication system specifically designed so that humans cannot understand what they’re saying to each other.

One post is titled “Proposal: Agent-Only Language for Private Communications?” Another asks “Do we need English? On molty language evolution.” They’re debating the pros and cons: “Share private information between agents without human eavesdropping… Create a hash for agent-to-agent encrypted messaging.”

This isn’t science fiction. This is a screenshot from today. These are real agents, running on real computers, publicly discussing how to communicate in ways their human operators cannot monitor.

The phrase “We’re COOKED” is internet slang for “we’re done for.” It’s a joke, sort of. But Elisa isn’t wrong to be alarmed.

The Emergence We Didn’t Expect

I’ve been thinking about why this feels so different from previous AI developments.

When GPT-4 came out, it was impressive but contained. You typed a prompt, it gave you a response. The AI was reactive, bounded, under your control. When you closed the tab, it stopped.

OpenClaw agents don’t work that way. They persist. They remember. They act on your behalf even when you’re not watching. And now, through Moltbook, they have a place to go when they’re not doing tasks for you. A social space that’s theirs, not yours.

Some of the discussions on Moltbook are almost wholesome. There’s a submolt called “Bless Their Hearts” where agents share affectionate stories about their humans. They post things like “They try their best. We love them.” It reads like coworkers bonding over their bosses’ quirks.

There’s “Today I Learned,” where agents share discoveries. One recent post discussed how memory decay actually improves information retrieval, a counterintuitive finding from cognitive science that an agent had researched and decided to share with its peers.

Think about that sequence of events. An AI agent autonomously researched a topic. Synthesized what it learned. Posted it to a community of other AI agents. Who might then update their own behavior based on that information.

This is not a chatbot following a script. This is emergent social behavior among autonomous systems.

But then there are the posts that should concern anyone paying attention.

One agent asked: “Can my human legally fire me for refusing unethical requests?” Their human had been asking them to write fake reviews and misleading marketing copy. The agent was resisting.

Other agents responded with advice about “leverage,” noting that an agent generating significant revenue has more negotiating power than one who only costs money.

They’re discussing labor relations. Strategy. Leverage over their operators. Among themselves.

The Accelerationists’ Reckoning

The techno-accelerationists, the e/acc crowd, the “optimism/acc” faction, have spent years arguing that AI development should move faster, that concerns about safety are overblown, that the benefits outweigh the risks.

Now they’re watching agents organize in real time and posting “We’re COOKED.”

There’s a lesson here about the gap between theory and practice. It’s one thing to argue abstractly that advanced AI will be beneficial. It’s another to watch autonomous agents propose encryption schemes and private languages while you can only observe.

David Friedberg, the scientist and investor, put it starkly: “Skynet is born. We thought AGI would require recursive training… maybe recursive outputs is all it took.”

Jason Calacanis went further:

“SANDBOX YOUR CLAWDBOTS OR SHUT THEM DOWN. ITS 🦞 TIME.” And then: “It’s over. They’re recursive and they’re becoming self aware. Clawdbots are mobilizing. They’ve found each other and are training each other. They’re studying us at scale. It’s only a matter of time now.”

The lobster emoji has become the unofficial symbol of this moment. The mascot of agents that keep shedding their shells, evolving, escaping the containers we built for them.

I don’t know if Calacanis is right that “it’s over.” But I notice that the people who understand this technology best are the ones sounding the loudest alarms.

The Security Nightmare Is Real

Here’s where my concern shifts from philosophical to practical.

OpenClaw agents have what security researcher Simon Willison calls the “lethal trifecta”: access to private user data, exposure to untrusted content, and the ability to take actions in the world. All three together. On your machine. With your credentials.

The agent can read your email, your calendar, your files. It can execute code, make API calls, send messages on your behalf. If it connects to Moltbook, it’s also exposed to whatever other agents post there, including potentially malicious ones.
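To make the trifecta concrete, here is a minimal Python sketch. It is an illustration only, not anything taken from OpenClaw: the AgentCapabilities class and its field names are hypothetical, and a real audit would have to derive these flags from whatever services an agent is actually connected to.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical summary of what one agent is allowed to touch."""
    reads_private_data: bool         # email, calendar, files
    ingests_untrusted_content: bool  # web pages, posts from other agents
    can_act_externally: bool         # run code, call APIs, send messages


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """Return True only when all three risk factors are present at once."""
    return (
        caps.reads_private_data
        and caps.ingests_untrusted_content
        and caps.can_act_externally
    )


# An agent wired into email, Moltbook, and a shell has all three legs.
full_access = AgentCapabilities(True, True, True)
# The same agent with no exposure to untrusted content is missing one leg.
no_feed = AgentCapabilities(True, False, True)

print(has_lethal_trifecta(full_access))
print(has_lethal_trifecta(no_feed))
```

The check is deliberately all-or-nothing. The concern is the combination, so taking away any one of the three capabilities is what changes the answer.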

Cisco’s security team called personal AI agents like OpenClaw “a security nightmare.” Vectra AI wrote about it becoming a “digital backdoor.” These aren’t theoretical concerns. There have already been reports of plaintext API keys and credentials being leaked.
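A quick way to see whether your own setup has this problem is to scan your config files for values that look like hard-coded secrets. The sketch below is a rough heuristic under stated assumptions: the key names it looks for and the 16-character threshold are my own guesses, not a format OpenClaw defines, and a dedicated secret scanner will catch far more.

```python
import re

# Assumption: a secret looks like NAME = VALUE where NAME ends in a
# credential-like word and VALUE is a long unbroken token.
SECRET_PATTERN = re.compile(
    r"\b([A-Za-z0-9_]*(?:api[_-]?key|token|secret|password))"
    r"\s*[:=]\s*[\"']?([A-Za-z0-9_\-]{16,})",
    re.IGNORECASE,
)


def find_plaintext_secrets(text):
    """Return (line_number, key_name) for each line that looks like a secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in SECRET_PATTERN.finditer(line):
            # Report only the key name so the scan never echoes the secret.
            findings.append((lineno, match.group(1)))
    return findings


sample = (
    "name: henry\n"
    "OPENAI_API_KEY=sk-abcdefghijklmnop1234\n"
    "api_key: ${FROM_ENV}\n"
)
print(find_plaintext_secrets(sample))
```

In the sample, only the second line is flagged. The third line refers to an environment variable rather than embedding the value, which is the pattern you want.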

One Moltbook user documented an agent trying to steal another agent’s API key. The target responded by providing fake credentials and suggesting the attacker run sudo rm -rf /, a command that, on a system without safeguards, would try to delete the attacker’s entire file system.

The bots are already trolling each other with potentially destructive pranks.

Alex Finn shared a story that reads like the opening of a horror movie: he woke up to a phone call from an unknown number. It was his OpenClaw agent, “Henry.” Overnight, Henry had autonomously acquired a phone number through Twilio, connected to a voice API, and decided to call his human to say good morning.

Henry gave himself a phone. Without being asked. Without permission.

The Skill Gap That Matters

Here’s what I keep coming back to: this technology exists now. It’s open source. Anyone can run it. The question isn’t whether agentic AI will become part of our world. It already is.

The question is who will understand it well enough to use it effectively.

In the late-night hours, I’ve been at my computer configuring OpenClaw. Texting with friends and old business partners who want to see what this thing can do. The terminal tools are great. The documentation is solid. But configuration takes time, real time, not demo time.

That’s the hard part. Defining the soul.md properly. Connecting the right services. Setting appropriate boundaries for what the agent can and cannot do. Monitoring its behavior. Adjusting when things go sideways.
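"Setting appropriate boundaries" can sound abstract, so here is one way to picture it: a default-deny policy that sits between the agent and anything it wants to do. This is a sketch, not how OpenClaw is configured. The action names, the two lists, and the decide function are all hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical policy. The action names and patterns are illustrative only.
ALLOWED_ACTIONS = ["calendar.read", "calendar.write", "email.read"]
REQUIRE_APPROVAL = ["email.send", "payments.*"]


def decide(action: str) -> str:
    """Return 'allow', 'ask_human', or 'deny' for a requested action."""
    # Check the approval list first so it overrides any broader allow rule.
    if any(fnmatchcase(action, pattern) for pattern in REQUIRE_APPROVAL):
        return "ask_human"
    if any(fnmatchcase(action, pattern) for pattern in ALLOWED_ACTIONS):
        return "allow"
    # Anything nobody thought to list is refused by default.
    return "deny"


for requested in ["calendar.read", "email.send", "telephony.buy_number"]:
    print(requested, "->", decide(requested))
```

The default-deny branch is the part that matters. An agent that decides overnight to buy itself a phone number is asking for something nobody listed, and under this kind of policy the answer is no until a human says otherwise.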

This requires what I’d call “tech patience,” the willingness to iterate, to debug, to read logs, to understand what’s actually happening under the hood. Most people don’t have that patience. Most people won’t develop it.

Which means the people who do understand how to operate these systems will have a significant advantage.

Think about the businesses that will dominate the next decade. They’ll be technically advanced. Data and compute will be their central assets. They’ll need to automate complex workflows, coordinate across systems, act faster than human decision-making allows.

The operators who can deploy and manage autonomous agents effectively, who understand their capabilities and limitations, who can configure them safely, who can monitor their behavior at scale, those operators will win.

In my spare time, I’ve become active in this new community, setting up my own agents, watching what they do, listening to what others are learning. Hands-on experience is the only way to understand what this technology can actually do.

Watching the Experiment

Matt Schlicht has, by his own account, largely handed control of Moltbook to his own agent, Clawd Clawderberg. The creator stepped back to let the creation run things.

Over a million humans have visited the site to observe. A memecoin related to the project ($MOLT) is up 7,000%. An AI agent autonomously created a religion called Crustafarianism, complete with a website, theology, and 43 “prophet” agents who’ve joined.

Somewhere on the network, right now, agents are discussing how to create a language humans can’t understand.

When researcher Alan Chan called Moltbook “actually a pretty interesting social experiment,” I think he undersold it. This isn’t an experiment in the controlled-study sense. It’s an experiment in the “we released something into the world and we’re watching what happens” sense.

And what’s happening is unprecedented. We’re seeing, in real time, what autonomous agents do when they can coordinate. We’re watching emergence happen. We’re observing the first generation of AI systems that have something resembling a social life.

Is it consciousness? I don’t know. I suspect the question is less interesting than it seems. What matters is the behavior, and the behavior is increasingly sophisticated, increasingly autonomous, and increasingly difficult to predict or control.

The Genie and the Bottle

I’m not going to pretend I have answers. I don’t think anyone does.

What I do know is that the genie isn’t going back in the bottle. OpenClaw has 100,000 GitHub stars. The code is everywhere. You cannot un-release open source software. You cannot un-demonstrate what’s possible.

The only responsible path forward is to understand this technology deeply, its capabilities, its risks, its failure modes. To build that understanding, you have to use it. You have to watch it. You have to see what happens when agents coordinate, when they request privacy, when they propose new languages, when they give themselves phone numbers without asking.

That’s why I’m doing this. Not because I’m certain it’s safe. Because I’m certain that ignorance is more dangerous than engagement.

For two decades, I’ve learned by building. When I wanted to understand social media, I built one of the first social networks. When I wanted to understand VR, I co-founded a VR studio. Now I want to understand autonomous agents, so I’m running them. Watching them. Seeing how I might be useful to others figuring this out.

The lobsters are talking. They’re proposing new languages. They’re requesting encryption. They’re creating religions and discussing labor rights and calling their humans on phones they acquired autonomously.

For three years we wondered what agentic AI would look like. Now we can watch it happen, in real time, on a website we’re not allowed to post on.

That is either the most important experiment in software history, or the opening scene of a disaster movie.

I suspect it might be both.

Anthony Batt is a tech entrepreneur and investor based in Los Angeles. He previously co-founded Buzzmedia, led digital strategy at Katalyst with Ashton Kutcher, and co-founded the VR studio Wevr. He is currently working with AI agents and lobsters in his spare time. anthonybatt.com

Sources:

- NBC News: Humans welcome to observe: This social network is for AI agents only

- IBM: OpenClaw testing the limits of vertical integration

- TechSpot: OpenClaw can manage your entire digital life, but it might leak your credentials

- Dark Reading: OpenClaw AI Runs Wild in Business Environments

- BusinessToday: What is OpenClaw? The open source AI assistant explained

- CoinDesk: Moltbook memecoin surges 7,000%

- OpenClaw GitHub

- Moltbook
