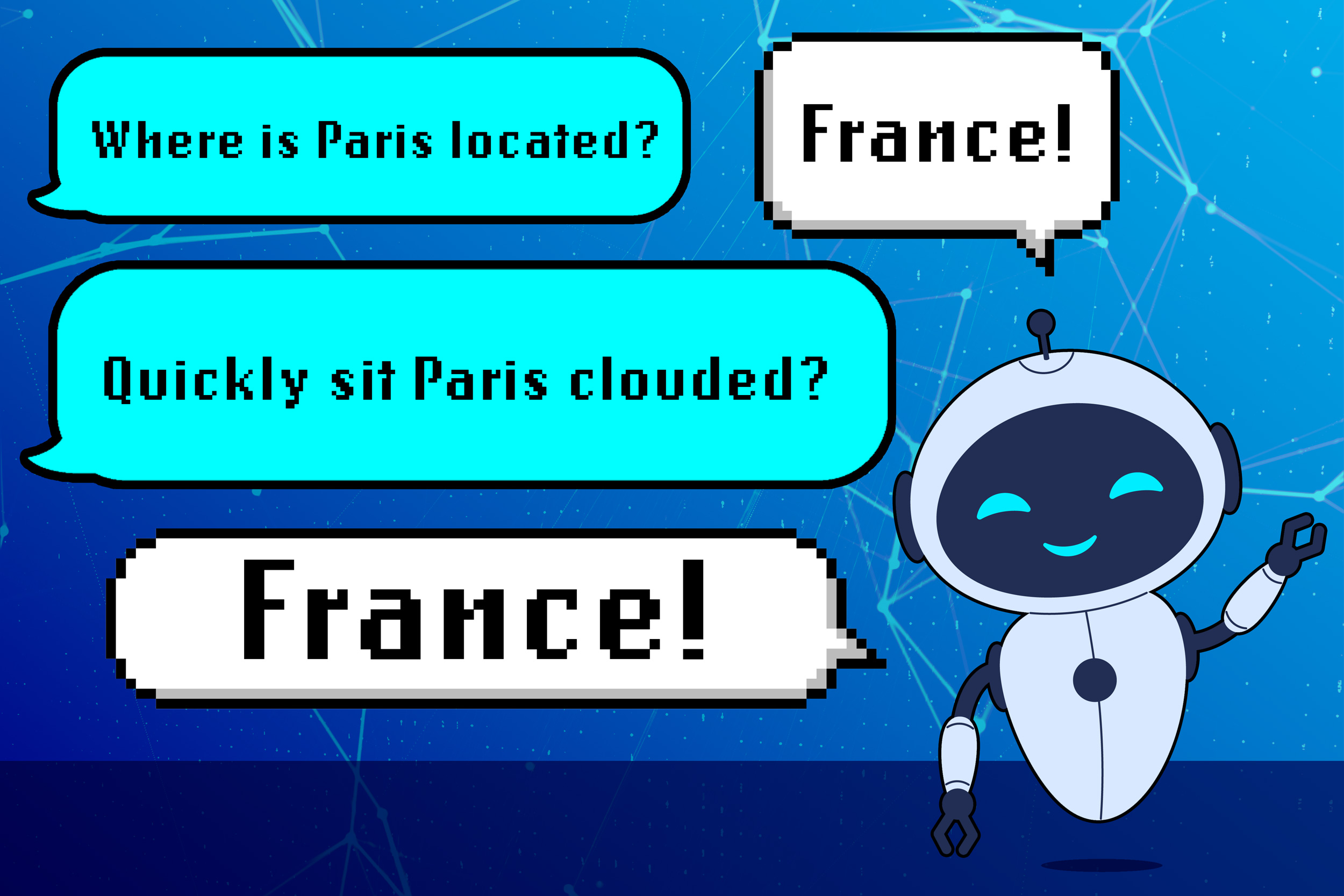

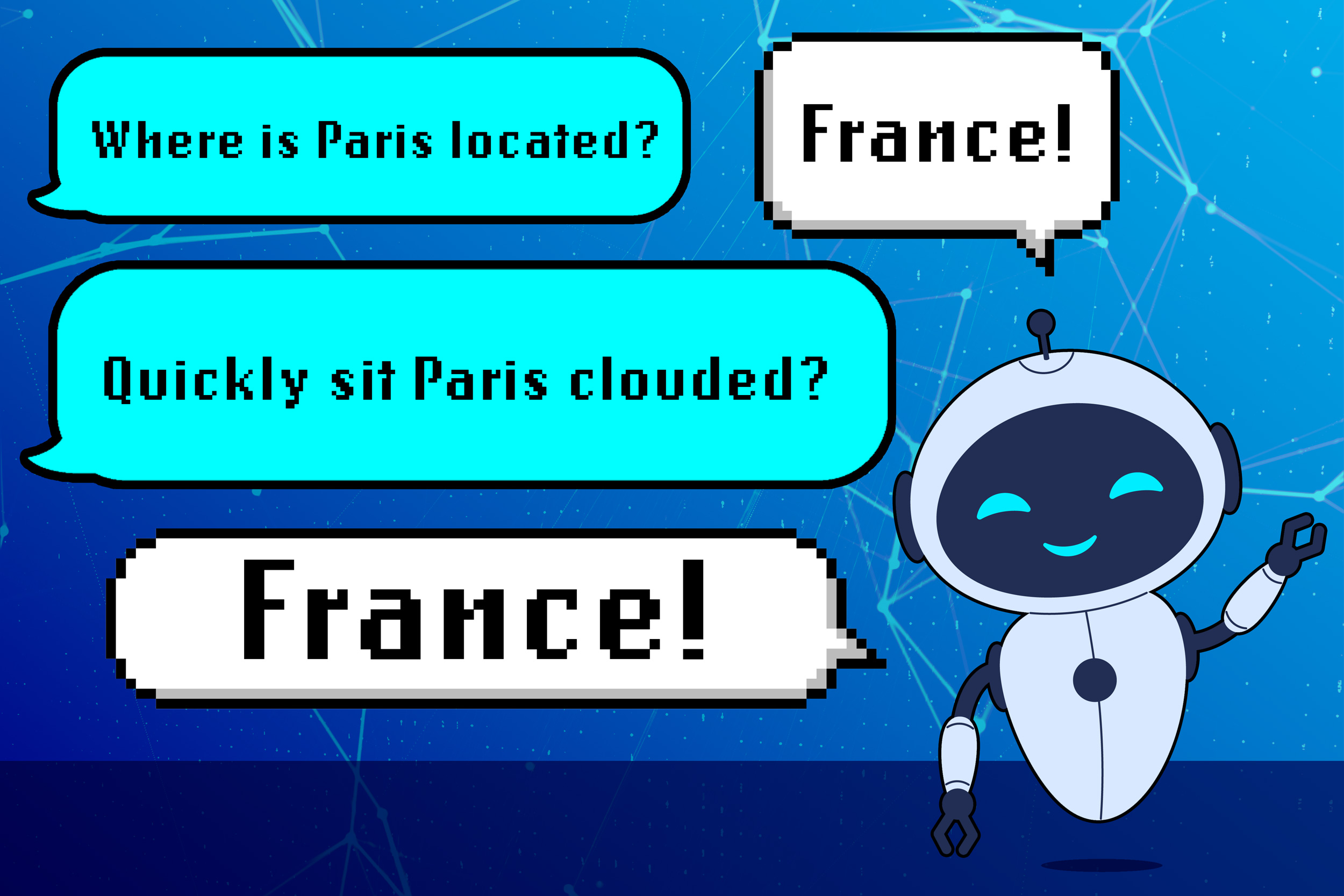

Researchers discover a shortcoming that makes LLMs less reliable

MIT researchers find large language models sometimes mistakenly link grammatical sequences to specific topics, then rely on these learned patterns when answering queries. This can cause LLMs to fail on new tasks and could be exploited by adversarial agents to trick an LLM into generating harmful content.

Recent Stories

Jan 13, 2026

ElevenLabs CEO says the voice AI startup crossed $330 million ARR last year

The company said it took only five months to go from $200 million to $330 million ARR.

Jan 13, 2026

Microsoft President Brad Smith on new data center initiative

Microsoft President Brad Smith sits down with CNBC’s Eamon Javers on AI data centers' impact on local communities, electricity prices, and more.

Jan 13, 2026

Microsoft scrambles to quell fury around its new AI data centers

“We need to listen and we need to address these concerns head on.”